How AI is Reshaping Physician Workflows

The healthcare landscape is undergoing a significant transformation, with artificial intelligence (AI) emerging as a pivotal tool in alleviating the administrative burdens that have long plagued physicians.

According to the American Medical Association's (AMA) 2025 Augmented Intelligence Research report, both the adoption and the perception of AI among healthcare professionals have shifted notably in the last few years. More physicians are viewing AI not as a replacement for clinical expertise, but as a partner in reducing the invisible workload that pulls attention away from patient care. The technology is moving from theory to practice, from pilot projects to daily workflows that meaningfully save time and improve decision-making. The key takeaways follow.

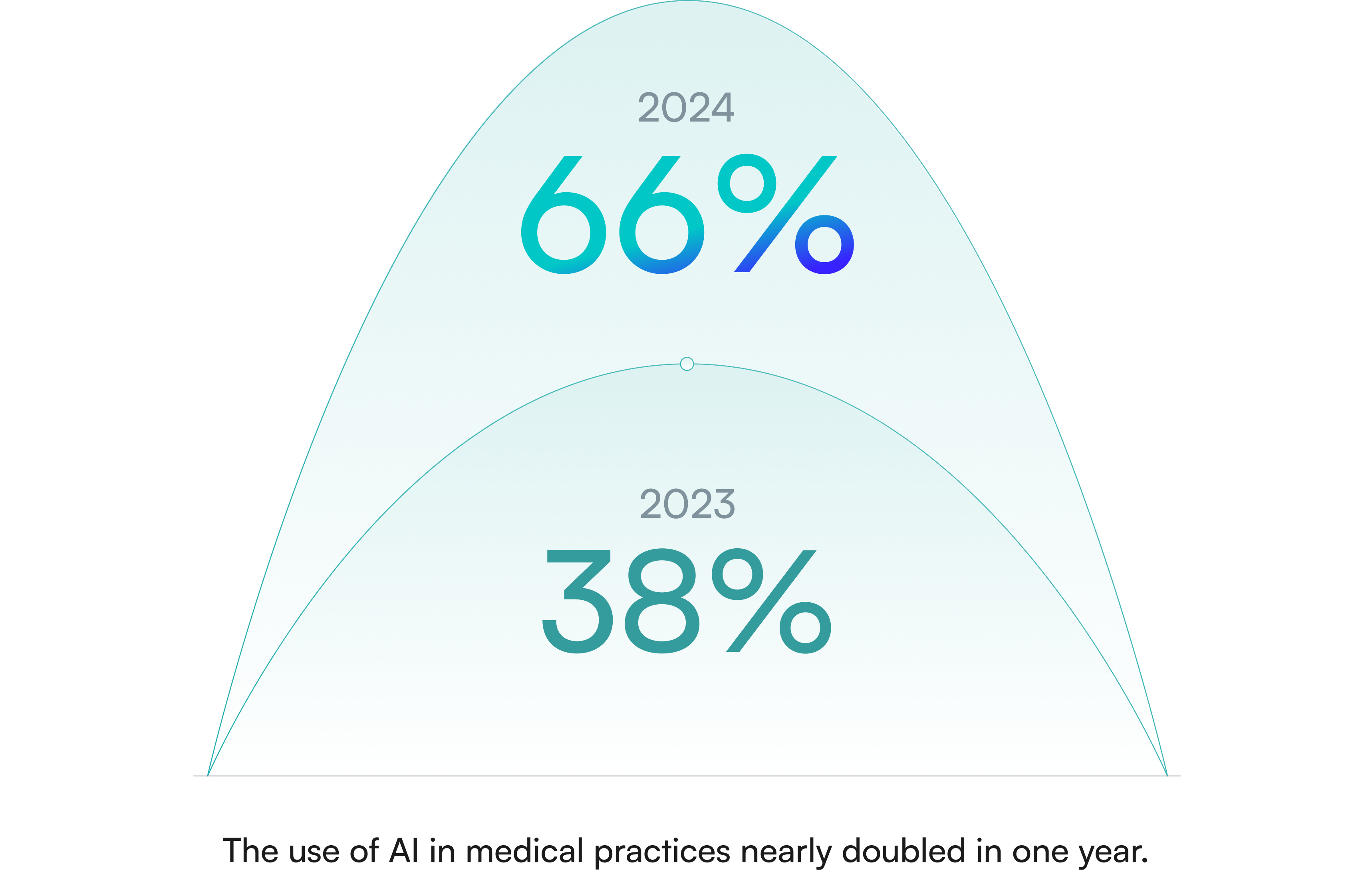

A Surge in AI Adoption

In just one year, the use of AI in medical practices has nearly doubled. In 2024, 66% of physicians reported integrating AI into their workflows, up from 38% in 2023, a gain of 28 percentage points.

This rapid adoption underscores the growing recognition of AI's potential to streamline tasks such as visit documentation, inbox management, and care plan development.

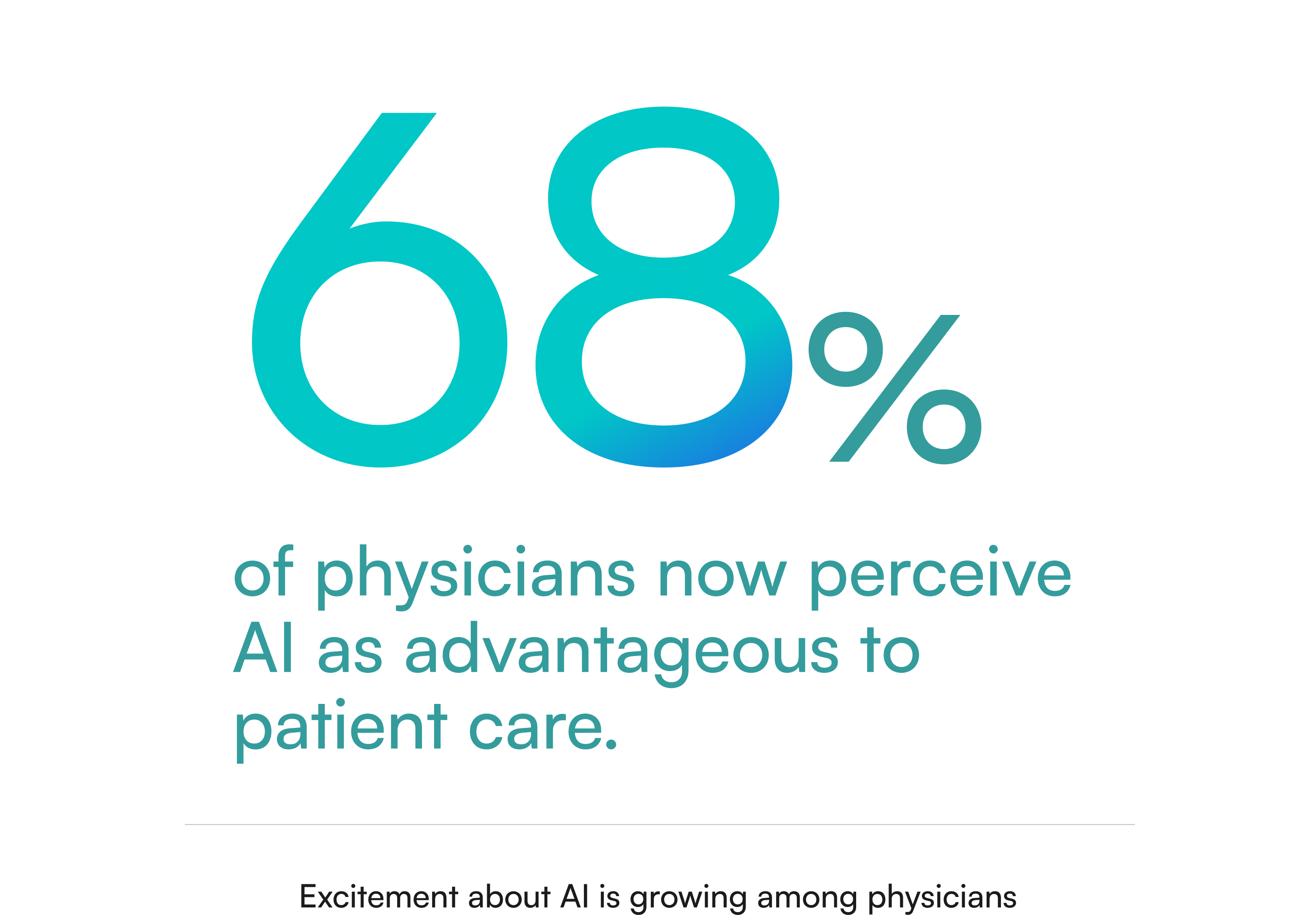

Evolving Sentiments Toward AI

Physicians' attitudes toward AI are becoming increasingly positive.

The AMA report highlights that 68% of physicians now perceive AI as advantageous to patient care, up from 63% the previous year. Moreover, 36% express more excitement than concern about AI's role in healthcare, indicating a growing trust in its capabilities. This shift is largely driven by firsthand experience. As physicians interact with AI tools in documentation, inbox management, and diagnostic support, skepticism is giving way to measured confidence. Many are beginning to see AI less as a disruptive force and more as a dependable extension of their clinical judgment.

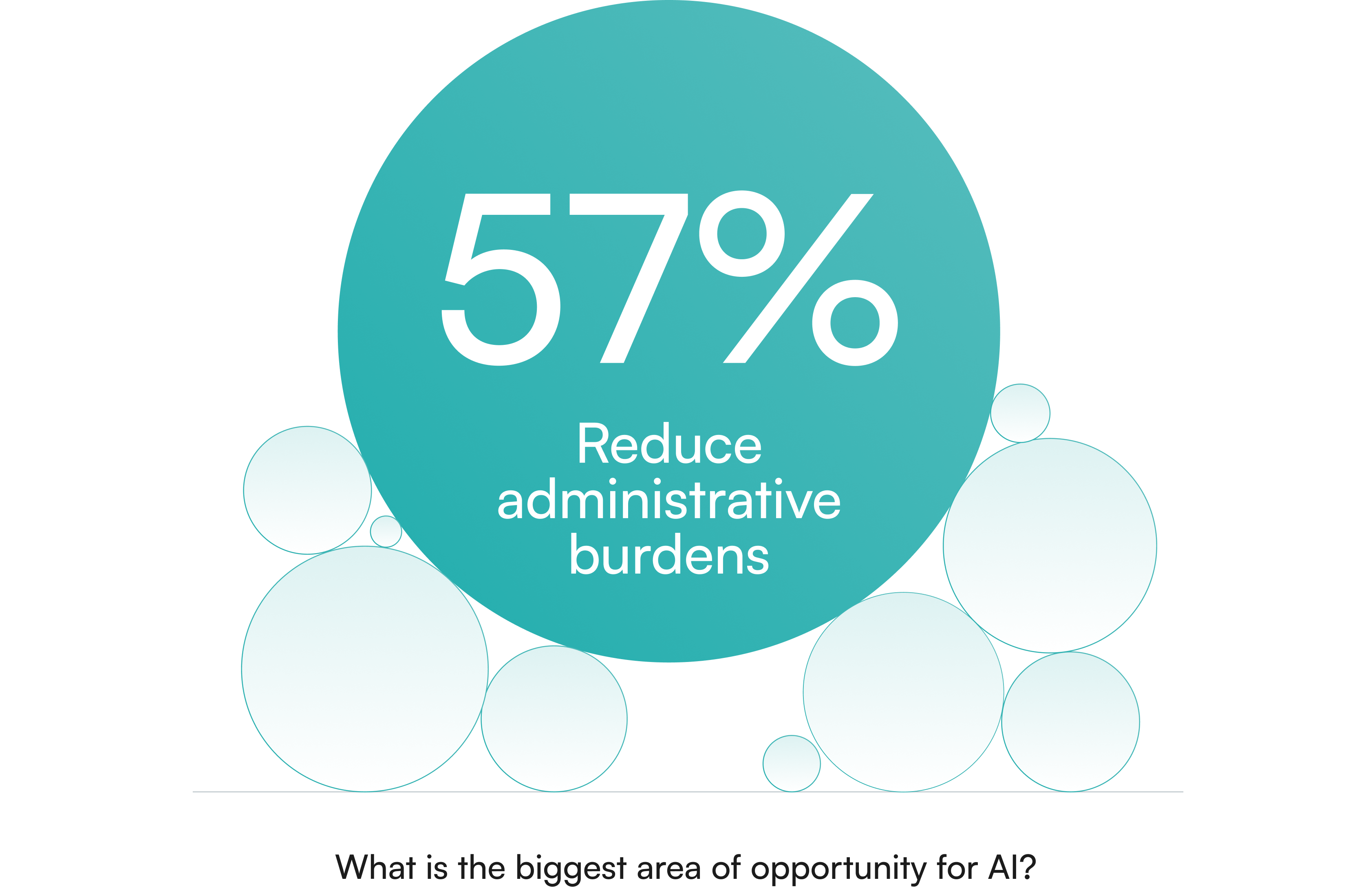

Addressing Administrative Overload

A significant 57% of physicians identify the reduction of administrative tasks through automation as the primary opportunity for AI in healthcare.

The emphasis is on leveraging AI to handle routine, time-consuming tasks, thereby allowing physicians to focus more on patient care.

This includes automating tasks like EHR documentation, billing/coding, prior authorizations, and triaging inbox messages. These tasks consume time and focus that could otherwise be spent on patient care or clinical decision-making. The administrative burden has become one of the strongest predictors of burnout, second only to workload volume. Reducing it helps preserve clinical energy and maintain capacity for patient care.

The message from the front lines is clear: help us with the tedious stuff. Every click saved and every note auto-drafted by AI is time given back to a physician and their staff.

Building Trust Through Integration and Oversight

Despite the optimism, physicians emphasize that AI tools must integrate seamlessly into existing workflows, protect data privacy, and come with adequate training.

Nearly half (47%) of the surveyed physicians advocate for increased oversight of AI-enabled medical devices to bolster trust and facilitate broader adoption. That desire for oversight reflects a healthy caution rather than resistance. Physicians understand the potential of AI but want clear accountability and safeguards that mirror the rigor of clinical practice.

Crucially, addressing administrative overload with AI requires doing it in a physician-friendly manner. Doctors have been burned by clunky tech before; they won’t tolerate an AI tool that creates new hassles or errors. Trust depends on reliability, transparency, and control—three qualities that determine whether AI feels like a burden or a benefit.

The AMA survey found that for physicians to embrace AI, it must integrate seamlessly with existing EHR workflows (84% called this essential), protect patient data, and allow physician oversight. Meeting these requirements demands the same rigor expected of any clinical system, adapted for a digital environment that’s already overloaded.

This is where thoughtful design is key. Successful solutions let physicians stay in control. For instance, Affineon lets each practice customize its lab result protocols, prescription renewal protocols, and patient message templates to fit its preferences, so the provider always decides how and when AI intervenes.

And by embedding directly into the EHR inbox, AI solutions like Affineon become part of the normal workflow (no new app or login needed).

The early results are encouraging: when administrative overload is intelligently automated, physicians feel the difference. They start to see an inbox that, for once, decreases in size by day’s end. They notice fewer trivial alerts interrupting their train of thought. The work feels smoother, less fragmented, and more sustainable.

They get home sooner (or actually take a lunch break) because the AI triaged the routine stuff. In short, assistive AI is removing some of the “stupid stuff” (to borrow an AMA phrase) that has long weighed on medicine.

Smart AI That Thinks Like a Clinician

As physicians continue to adopt AI, their expectations for these tools are also becoming more sophisticated. Doctors aren’t interested in simplistic algorithms that might misfire; they want “smart AI that thinks like a clinician.”

What does that mean? Fundamentally, it means an AI that understands clinical context, makes safe and evidence-based decisions, and augments the physician’s own thought process rather than disrupting it. The AMA survey noted that physicians value AI tools that are high-quality, validated, and transparent. In other words, tools that behave like a trained colleague. The goal isn’t to replace medical intuition but to extend it—supporting clinical reasoning with the depth and consistency of machine analysis.

One way Affineon and similar AI solutions strive to meet this bar is by embedding clinical reasoning into the AI’s workflow. For example, when Affineon’s agent triages lab results, it doesn’t just check if values are in the normal range. It performs a “deep read” of the patient’s chart, looking at trends over time and relevant history, much like a physician scanning the patient record before reaching a conclusion. This process transforms what was once reactive inbox management into proactive clinical insight. An AI agent like Affineon can read 10 years of historical data in three seconds, something a physician simply doesn’t have time to do. If a lab result needs a doctor’s review, the AI provides a “smart summary” that includes trends over time, surfacing critical insights while reducing cognitive load. This synthesis helps physicians regain cognitive bandwidth and focus on the cases that truly need their expertise.

“It delivers depth, clarity, and no fluff, exactly how I need it,” one Affineon customer mentioned, noting that the AI’s notes felt tailored to her clinical mindset. That sense of alignment—of a tool that “thinks like I do”—is what separates useful automation from genuine augmentation.

Of course, an AI that thinks like a clinician must also act as a reliable team player. This is where issues of trust and safety come in. Physicians remain (rightly) cautious. They want to know that an AI won’t miss an important result or send the wrong message. Building trust requires transparency (show the doctor why the AI marked a result as normal) and fail-safes that loop the human in when judgment calls arise. Affineon tackled this by ensuring that any result outside of predefined normal parameters is escalated to the provider with clear flags and by providing a channel for feedback.
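To make the escalation idea concrete, here is a minimal sketch of a "range check plus trend check" triage rule. It is an illustration only and not Affineon's implementation: the names `ReferenceRange`, `TriageDecision`, and `triage_lab_result`, the 25% trend threshold, and the sample values are all hypothetical, and none of it is clinical guidance. Every decision carries a plain-language reason, which is one simple way to show the doctor why a result was or was not flagged.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class ReferenceRange:
    """Practice-defined normal range for one lab test (hypothetical type)."""
    low: float
    high: float


@dataclass(frozen=True)
class TriageDecision:
    """Outcome of triage, with a human-readable reason for transparency."""
    escalate: bool
    reason: str


def triage_lab_result(
    value: float,
    ref: ReferenceRange,
    history: Sequence[float] = (),
    trend_fraction: float = 0.25,  # assumed threshold, not a clinical standard
) -> TriageDecision:
    # Rule 1: anything outside the predefined range always goes to the provider.
    if value < ref.low or value > ref.high:
        return TriageDecision(
            True, f"value {value} is outside the range {ref.low}-{ref.high}"
        )

    # Rule 2: an in-range value that departs sharply from the patient's own
    # prior results is also escalated, since the trend may matter clinically.
    if history:
        baseline = sum(history) / len(history)
        if baseline != 0 and abs(value - baseline) / abs(baseline) > trend_fraction:
            return TriageDecision(
                True,
                f"value {value} differs from the prior mean {baseline:.2f} "
                f"by more than {trend_fraction:.0%}",
            )

    # Otherwise the result is treated as routine.
    return TriageDecision(False, "within range and consistent with prior results")


if __name__ == "__main__":
    # Illustrative numbers only.
    normal = ReferenceRange(low=3.5, high=5.0)
    print(triage_lab_result(5.6, normal, [4.0, 4.2]))
    print(triage_lab_result(4.9, normal, [3.6, 3.7, 3.6]))
    print(triage_lab_result(4.1, normal, [4.0, 4.2]))
```

In this sketch the first two calls escalate (one out of range, one in range but well above the patient's baseline) and the third is treated as routine. A real system would need validated thresholds per test, unit handling, and an audit trail.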

In fact, the AMA survey highlighted that 88% of physicians want a designated feedback channel to developers; they want to be heard if the tool isn’t working as expected. Incorporating frontline feedback has been crucial in refining these AI systems. Early physician users helped tune the Affineon algorithms, essentially teaching the AI to think more like they do. This collaborative iteration builds a sense of shared ownership in the technology. It transforms the AI from a black box into a transparent partner that learns from real clinical input, closing the loop between developers and end users. When done right, the outcome is AI that feels “smart” in the same way a well-trained staff member is smart: anticipating needs, fitting in smoothly, and making the clinician’s load lighter.

One year into using Affineon’s AI inbox agent, an internist reported, “Affineon has reduced my lab review time from an hour every day to about 15 minutes.” But equally important, he said, “I trust it now. It hasn’t missed anything critical, and it’s like it knows how I think.” That shift, from reliance to trust, is what defines meaningful progress in healthcare AI. That trust is hard-won and easily lost, which is why continuous focus on safety and reliability is paramount. The AMA has been pushing for “increased oversight” and clear principles to guide AI in healthcare, precisely to ensure these tools enhance care without introducing new risks. Companies like Affineon have taken that to heart, pursuing rigorous validation and compliance (e.g., HIPAA, SOC 2) to reassure both clinicians and health system leaders that the AI is safe, secure, and physician-aligned. The result is technology that feels less like software and more like a true clinical partner, one that extends the reach of care while respecting the clinician’s judgment.

Restoring Time to Care. And to Breathe.

Every physician will tell you that the joy of medicine is being with patients. Listening, diagnosing, teaching, healing… that’s the core of the calling. It’s the part of the job that feels meaningful, the part that drew most doctors to medicine in the first place.

Yet, bureaucracy often forces a zero-sum tradeoff: more time on paperwork means less time with patients. This is why the statistic from a recent survey is so striking: 89% of clinicians said they could spend more time with patients if they didn’t have to spend so much time on the inbox. Physicians want to give patients more attention, but their day is swallowed by messages and clicks. It’s an invisible tax on empathy—each click, note, and login gradually pulling focus away from patient care.

By unburdening the inbox, AI can liberate that time back to where it truly matters: with the patient. For healthcare leaders, this is a powerful rationale for embracing AI assistants. Restoring time to care isn’t just about schedules or workflows; it’s about rejuvenating the human connection in medicine and improving outcomes. When clinicians can focus fully, both care quality and morale rise. Patients sense it, staff feel it, and organizations benefit from a calmer, more efficient rhythm.

When doctors have more bandwidth, they can spend a few extra minutes in each visit, or simply approach their next appointment less hurried. Patients feel the difference: a doctor who isn’t rushing or glancing at the clock is one who can empathize and engage more fully. It’s telling that in systems that have implemented AI solutions, physician satisfaction rises hand-in-hand with patient satisfaction. Freed from drowning in electronic tasks, doctors are more present and patients get timely responses—a win-win that boosts morale on both sides of the stethoscope. It’s a reminder that every improvement in workflow is ultimately an investment in the patient experience.

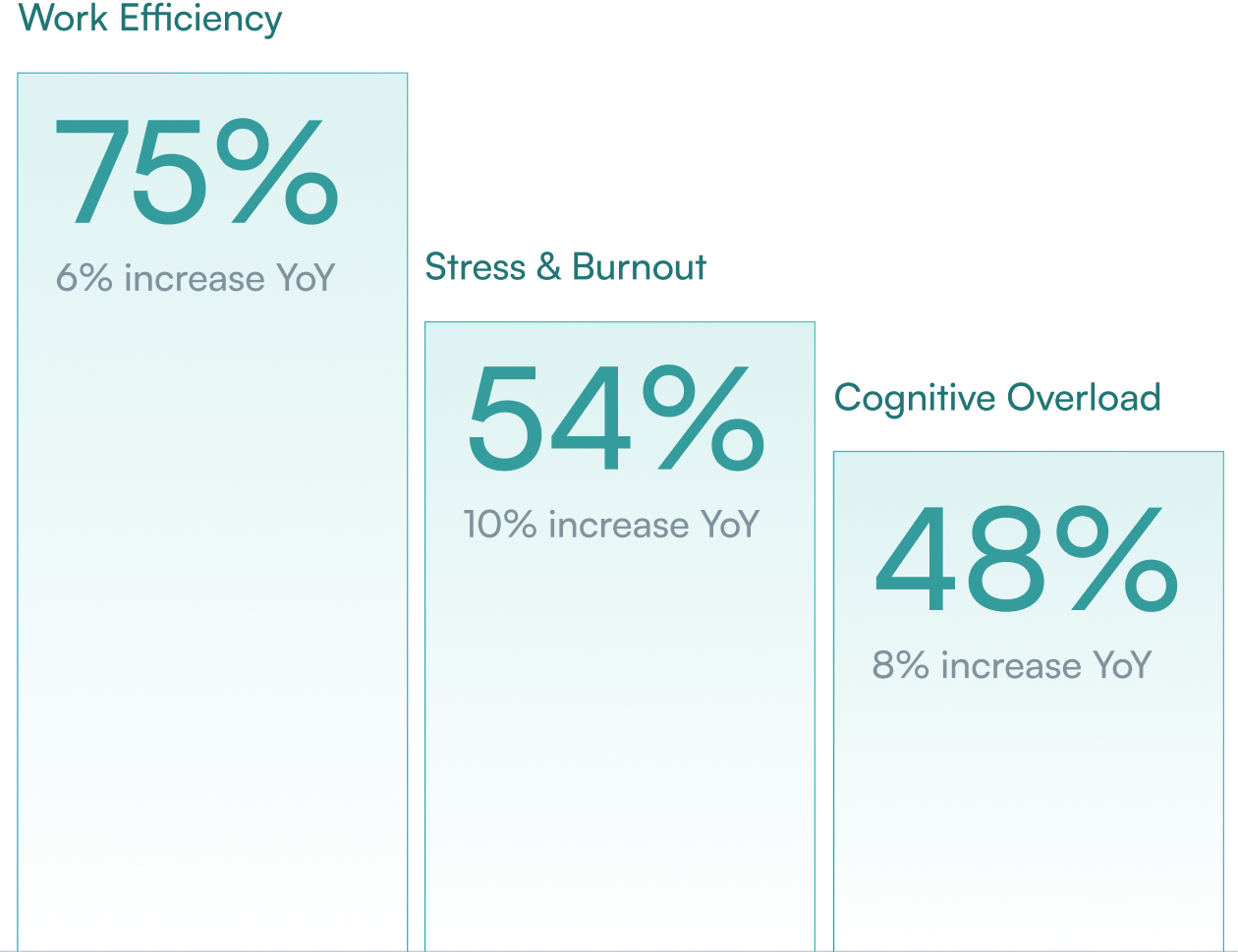

Beyond reclaiming patient time, there’s another equally important beneficiary: physicians’ own well-being. Burnout studies repeatedly cite “time pressure” and “chaotic environments” as major drivers of distress. By cutting 5 or more hours of busywork a week, AI can give clinicians something priceless—a breather. That pause can be the difference between finishing a day depleted or leaving with enough mental space to be present at home.

Consider the story of Dr. Megan (an OB/GYN), who used to spend Sunday evenings reviewing lab results from the prior week. She described the ritual: “I’d put my kids to bed, then open my laptop for two hours to go through results so I wouldn’t be overloaded Monday morning.” It was draining family time and mental energy. After her practice adopted an AI lab triage tool, Dr. Megan experienced a profound change. Normal results were handled automatically and patients got immediate reassurance, even on weekends. One Sunday, she realized she hadn’t checked her inbox at all – and nothing catastrophic happened. “I actually watched a movie with my husband without that nagging worry about the lab results,” she says. “For the first time in years, I felt free.” That freedom allows physicians to reclaim personal time without compromising patient care.

Such anecdotes highlight the emotional relief these technologies can provide. The constant background stress eases when you know an intelligent system has your back, catching the low-level issues. Doctors report feeling less guilt and anxiety about unanswered messages and more confidence that they can truly disconnect when they’re off duty. The impact extends beyond time savings to measurable reductions in stress and cognitive fatigue.

In the words of one physician user, “Affineon is the sanity-saving superpower every overloaded provider deserves. The platform gets me.” From a leadership perspective (CMOs, CMIOs, etc.), these human stories reinforce a business case: physician retention and wellness. A happier, less burned-out doctor is less likely to cut back hours or leave the organization. By investing in tools that restore joy and breathing room, healthcare executives signal to their teams that they value physician well-being. The AMA’s studies have even tied reduced burnout to better patient outcomes and lower organizational costs, creating a ripple effect of benefits. In sum, giving doctors time back isn’t a luxury – it’s increasingly a necessity to sustain the workforce and quality of care.

Conclusion

The AMA's findings reflect a pivotal moment in healthcare, where AI is transitioning from a novel concept to a trusted ally in clinical practice. As physicians continue to embrace AI, solutions like Affineon play a crucial role in transforming the healthcare experience—for providers and patients alike. The early outcomes are clear: responsible AI design is reducing operational strain and improving the day-to-day experience of care delivery. The next phase of progress will depend on keeping physicians at the center of innovation—building tools that learn from their expertise and evolve alongside the realities of modern medicine.